Has it been a while? Yes. This is because I am busy/don’t really know how to summarize everything that is going on/feel vaguely guilty about writing when there is always homework I am supposed to be doing.

But midterm season is ending, so.

Friday and Saturday I went to a hackathon: basically, you have a strict time limit to create some kind of computer code (or a hardware hack). I went without a team, so I got paired up with a few other freshmen from my school and a high school senior from a nearby town. Our idea was a website that takes a tweet or other writing sample and tells you whether it sounds closer to Trump’s tweets or to Obama’s. I think that if I had been working alone I would have chosen something different, but I got a little bit overruled because the people in my group wanted to work on something political, so here we are.

We stayed up all night (I didn’t know caffeinated chocolate was a thing, but it is, and it’s a life changer. Because I never drink caffeine, I was UP all night.) My teammates left at 7AM, so from 7AM until 4PM I was trying to make the whole thing work and connect all the parts, and it was a mess. But I made friends with the upperclassman mentors! And we had a big pancake breakfast! And overall it was really fun.

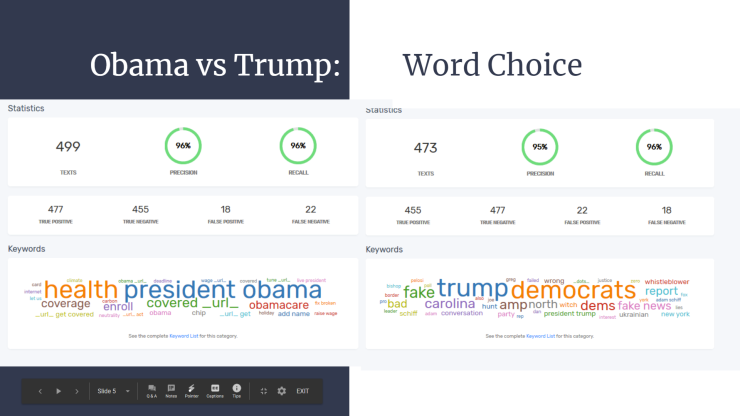

I was interested in natural language processing, so I put myself in charge of the neural network thing. We only had from Friday at 7PM until Saturday at 4PM, and I only know how neural networks work for image recognition, not language, so we ended up using an API for a neural network that we trained on our data. I want to learn how that actually works at some point. Linguistically it is cool, but the one we trained really only did word frequency analysis (e.g., if you enter a word that only Obama uses, you will get Obama with high confidence, even if the sentence says something you know he does not agree with. In the example

you get a response of Obama with a confidence of 0.991 because, although Trump tends to use the word “bad,” Obama tends to talk more about health insurance.) So this could be improved. But for something built in like 21 hours, it is pretty decent.
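The “word frequency” behavior described above is easy to reproduce with a much simpler model than whatever MonkeyLearn ran internally. The sketch below is a swapped-in technique, not our actual classifier: a multinomial naive Bayes model over word counts with add-one smoothing. The class name, the toy labels “A” and “B”, and the training sentences are all invented for illustration (they are not real tweets). It shows the same failure mode: one word that leans toward A gets outvoted by two topic words that lean toward B.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word-like tokens (keeps # and @)."""
    return re.findall(r"[a-z0-9#@']+", text.lower())


class WordFrequencyClassifier:
    """Hypothetical multinomial naive Bayes over word counts, add-one smoothing."""

    def __init__(self):
        self.word_counts = {}         # label -> Counter of word occurrences
        self.total_words = {}         # label -> total number of words seen
        self.doc_counts = Counter()   # label -> number of training texts
        self.vocab = set()            # every word seen in training

    def train(self, label, texts):
        counts = self.word_counts.setdefault(label, Counter())
        for text in texts:
            tokens = tokenize(text)
            counts.update(tokens)
            self.vocab.update(tokens)
            self.doc_counts[label] += 1
        self.total_words[label] = sum(counts.values())

    def predict(self, text):
        """Return (best_label, confidence) for a new piece of text."""
        tokens = tokenize(text)
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        log_scores = {}
        for label, counts in self.word_counts.items():
            # log P(label) + sum over words of log P(word | label)
            score = math.log(self.doc_counts[label] / n_docs)
            for tok in tokens:
                score += math.log((counts[tok] + 1) / (self.total_words[label] + v))
            log_scores[label] = score
        # Normalize the log scores into probabilities (softmax) to get a confidence
        top = max(log_scores.values())
        weights = {k: math.exp(s - top) for k, s in log_scores.items()}
        total = sum(weights.values())
        best = max(weights, key=weights.get)
        return best, weights[best] / total


# Invented toy sentences, NOT real tweets: "A" overuses "bad", "B" talks about health insurance.
clf = WordFrequencyClassifier()
clf.train("A", ["this deal is bad", "very bad news today", "bad bad bad"])
clf.train("B", ["health insurance matters",
                "more families have health insurance",
                "talk about health care"])

label, confidence = clf.predict("bad health insurance")
print(label, round(confidence, 2))  # → B 0.64
```

Because each word contributes independently, the model never looks at what the sentence means; it only asks which side used those words more often, which is why a sentence one politician would disagree with can still be assigned to him.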

I’ve pasted in our submission text with some comments of my own. The comments are probably obvious, so they are not marked.

What it does

The user inputs an average tweet from their account; it is assumed that the user will input a tweet-length sample of writing, with hashtags or links if desired. The language sample is tested against a neural network trained on 500 tweets each from Donald Trump and Barack Obama. The program outputs the politician whose language most closely matches the user’s sample, along with a confidence score for that prediction. Of course, the percentages are likely to be low, as the neural network expects only tweets from Obama or Trump; however, the language sample can come from the user’s own tweets or from the tweets of any famous individual.

How we built it

Our main technologies were HTML, CSS, JavaScript, and MonkeyLearn, an API for building a neural network. The neural network was first set up to distinguish between 10 famous celebrities, athletes, and politicians. Due to the limitations of the neural network software, this number of classes made it impossible to reliably tell the celebrities apart. (This turned out to be because a lot of celebrities tweet only pictures, videos, or memes and then tag each other, which is really difficult for word frequency analysis to handle. I’d like to do something in the future that uses relationship analysis (i.e., who is tagging whom) to suggest who a user is, but that wasn’t in the scope of this project.) Then, the front end was created with HTML and CSS. Finally, the two parts were connected using JavaScript.

Challenges we ran into

One of the biggest issues we faced as a team was the Twitter API. (THE TWITTER API DOESN’T WORK. I THOUGHT I WAS JUST BAD AT CODING, BECAUSE MY TEAMMATES WENT HOME AND I WAS TRYING TO DO THIS MYSELF ON NO SLEEP. I made friends with like every single mentor there, and they all tried to help, and they were so helpful, and we discovered that the Twitter API 1) is not supposed to be used with JavaScript, 2) is supposed to be run from a private server, and 3) doesn’t work very well. So I AM bad at coding, but it’s not just me.) Originally, the app was meant to take in a user’s Twitter username and judge the user based on their latest or most popular tweet. In addition, the bottom of the screen was meant to show a real-time comparison of the user’s Twitter feed and the feed of the celebrity they most closely matched. However, we discovered very late that Twitter requires a separate server to use the API, and the API was very difficult to work with. In fact, it was recommended that JavaScript not be used with the Twitter API at all, which we only learned after our code was built and the four of us had gained some familiarity with JavaScript.

We used MonkeyLearn, a neural network API, to build our classifier. Although the original plan was to classify 10 different celebrities, the free plan on MonkeyLearn limited us to 1000 training examples, and we were unwilling to pay $300 for 2000 more. 100 training examples per individual is completely insufficient for a neural network, so the project was cut to two celebrities. In addition, we found that many celebrities tweeted videos or images and tagged others in them. Future projects should look at these tags to build relationship models, and at the images themselves to determine personal likes. Because three members of our team were freshmen and one was a senior in high school, our limited coding experience caused many issues. Most of us were just beginning to learn JavaScript and HTML (This was the first time I used HTML and JavaScript, and look at how pretty our website is! It’s not THAT ugly! I’m so proud.), and this steep learning curve showed up in many ways throughout the 24 hours. Nevertheless, we were proud of the work we completed and the amount we learned.

Accomplishments that we’re proud of

We were proud of learning to use JavaScript and HTML, and of building a website that works as long as the user makes a good-faith effort. For three of us this was our first hackathon, and we were also proud of completing it and of working together toward this goal.

Future Projects

It would be interesting to build a neural network by hand to avoid the limit on training examples. Unfortunately, the project was only chosen once we were at Polyhack, and despite a few initial attempts to research natural language processing, we ultimately decided it was impractical to build our own network from scratch in such a short period of time. A hand-built network would allow more training examples, which would improve accuracy on the two candidates already chosen and make it possible to add more celebrities. It would also be interesting to look at who is tagged in posts and to analyze whether relationship circles could accurately predict which celebrity wrote a tweet. Finally, it would be interesting to compare the ratio of pictures to words in each celebrity’s tweets as a measure of communication style. Perhaps such data could reveal differences between political parties or occupations.
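As a rough illustration of the “relationship circles” idea, here is a minimal sketch that compares who a user tags with who each celebrity tags, using Jaccard similarity (shared handles divided by all handles). Nothing like this was in our project; the function names, the simple @-mention regex, and the example handles are all hypothetical, and it works on tweet text you already have rather than calling the Twitter API.

```python
import re

# Hypothetical mention pattern: "@" followed by up to 15 word characters.
MENTION = re.compile(r"@(\w{1,15})")


def mentions(tweets):
    """Collect the lowercase @handles tagged across a list of tweets."""
    found = set()
    for tweet in tweets:
        found.update(handle.lower() for handle in MENTION.findall(tweet))
    return found


def jaccard(a, b):
    """Set overlap |A & B| / |A | B|, defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def closest_by_mentions(user_tweets, celebrity_tweets):
    """Return the celebrity whose tagged accounts overlap most with the user's."""
    user = mentions(user_tweets)
    scores = {name: jaccard(user, mentions(tweets))
              for name, tweets in celebrity_tweets.items()}
    return max(scores, key=scores.get), scores


# Hypothetical handles and tweets, for illustration only.
best, scores = closest_by_mentions(
    ["great game @alice @bob", "thanks @Carol"],
    {"celeb_x": ["with @alice and @bob tonight"], "celeb_y": ["shoutout @dave"]},
)
print(best, scores)
```

Here the user shares two of three total handles with celeb_x, so celeb_x scores 2/3 and wins. A fuller version would likely need to weight frequent tags more heavily than one-off ones.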

Here is the final product, with screenshots of the home screen (the image alternates between the one shown and one of Trump) and of the site tested on Donald Trump’s most recent tweet. Because that tweet was posted 31 minutes before I wrote this, it was not part of the training set.

We ended up being a finalist! That meant I had five minutes to put together a presentation on our website and how we had made it, which would have been fine, except that I found the statistics showing how the neural network had judged between tweets, and I spent too long looking at them (and probably talking about them in my presentation). Here is that word cloud (bigger words are more frequent; the left side is Obama and the right side is Trump).

The project is kind of a mess and I will probably never work on it again, but it is on my GitHub if anyone wants to mess around with trying out text, and I’m still very proud of my ugly work.